1. Data & Distributions¶

Machine learning, by definition, is the art of making computers more intelligent using data. Thus, it’s natural for us to begin our discussion of machine learning with data itself –it’s what we use to make decisions and develop reasoning about the world. However, for machine learning to work both theoretically and in practice, we need to make strong assumptions about our data. This section will be about how we formally describe data in machine learning, and additionally provide a brief background on probability theory.

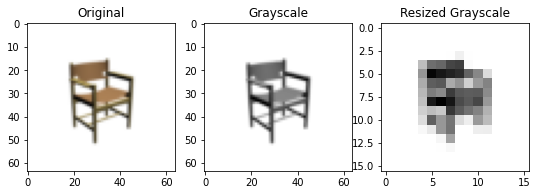

Before we can teach computers, we need to be able to provide them data in a representation they understand. For example, data can be in the form of images, text, or almost anything else, but computers operate exclusively in the world of numbers. The first step to machine learning is taking your data and converting it into numerical form. While we will quickly provide a few examples, there’s a lot of depth to this topic as it can be key to getting good performance from machine learning algorithms.

As numbers:

[255 255 255 255 255 255 255 255 255 255 255 255 255 255 255 255]

[255 255 255 255 255 255 255 255 255 255 255 255 255 255 255 255]

[255 255 255 255 255 255 255 255 255 255 255 255 255 255 255 255]

[255 255 255 255 255 255 255 254 245 254 255 255 255 255 255 255]

[255 255 255 255 213 163 158 137 133 252 255 255 255 255 255 255]

[255 255 255 255 188 97 108 115 121 156 179 232 255 255 255 255]

[255 255 255 255 202 153 149 201 187 224 191 177 255 255 255 255]

[255 255 255 255 209 172 180 131 149 152 151 181 255 255 255 255]

[255 255 255 255 214 109 93 107 141 150 138 190 255 255 255 255]

[255 255 255 255 224 213 220 128 151 165 177 206 255 255 255 255]

[255 255 255 255 233 154 189 167 232 244 191 212 255 255 255 255]

[255 255 255 255 250 241 189 166 255 255 244 243 255 255 255 255]

[255 255 255 255 255 255 249 197 255 255 255 255 255 255 255 255]

[255 255 255 255 255 255 254 250 255 255 255 255 255 255 255 255]

[255 255 255 255 255 255 255 255 255 255 255 255 255 255 255 255]

[255 255 255 255 255 255 255 255 255 255 255 255 255 255 255 255]

Above is an example of possible preprocessing steps to take some real world data (images), and convert into a representation we can easily use for machine learning. The image is first converted to grayscale, then downsized and ultimately represented numerically as a matrix of integers each giving a color intensity. Though how data is converted into a numerical representation may vary greatly depending on the type of data, such numerical representations allow us to build mathematical models.

In machine learning, we make the strong assumption that all of our data is derived from a probability distribution, usually denoted \(p_{data}\). It’s OK if you don’t know what that means yet. It turns out that making this assumption, that there exists some mathematical density function over all of our data, lets us formulate a rigorous mathematical backbone for making decisions from data. First, however, it’s crucial to have a basic understanding of probability theory.